AIBES 5-Point Friday #2

From "world models" to AI Disney characters - here are 5 things our AI Business Engineers are excited about this week!

Signal Worth Noticing

The center of gravity in AI quietly shifted this week from words to worlds.

Fei-Fei Li (often called the “godmother of AI”) has been pounding the table that current LLMs are “wordsmiths in the dark”—eloquent, but largely blind to physical reality. In a new essay and interviews, she argues that spatial intelligence—AI that understands and reasons about 3D space, physics, and geometry—is the defining challenge of the next decade.

That’s not just theory. Her company World Labs raised around $230M to build “world models” and just launched Marble, which lets users generate editable 3D worlds from text, image, or video prompts and drop them straight into engines used for interactive games, film, and data visualization.

Zoom out, and Demis Hassabis is saying the quiet part out loud too: AGI (Artificial General Intelligence) could arrive by 2030, and the next 12 months will be defined by world models, systems that simulate the physical world well enough to predict how it works.

AIBES Tech of the Week

We’ve been hands-on this week with Google Antigravity, the new agent-first AI IDE that launched in public preview just a few weeks ago.

Antigravity is built on a fork of VS Code but flips the center of the experience from “you type, AI helps” to “agents work, you supervise.”

At AIBES, we’re experimenting with Antigravity as a “power tool” alongside our Airdex platform: using it to spin up both service ideas and internal tools while keeping humans firmly in the loop on permissions and reviews. The direction of travel is clear: IDEs are becoming orchestration consoles for swarms of coding agents, not just prettier text editors. Who knows which tool will come out next and change how we work?

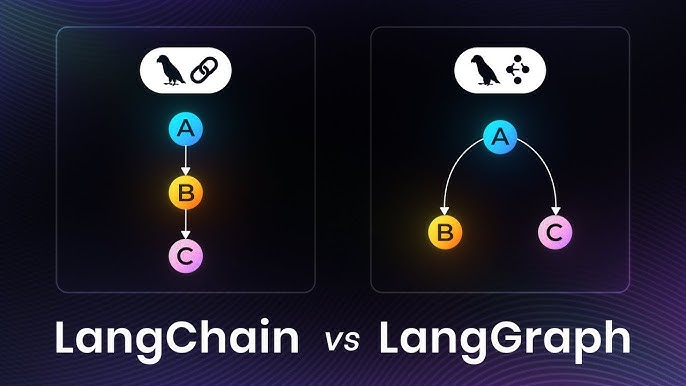

Framework We’re Using

Under the hood, our tech stack is evolving!

LangChain for the building blocks – providing a model-agnostic agent architecture that plays nicely with any major model provider.

LangGraph for the wiring – giving us defined, deterministic workflows that are stateful, multi-actor, and sometimes cyclic, where different nodes (planner, retriever, scorer, router) can coordinate across long-running tasks.

Langfuse for the x-ray vision – adding even more observability, tracing, evals, and metrics to Airdex as a parent platform.
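For readers who want to picture the "wiring" idea, here is a minimal plain-Python sketch of a stateful, cyclic workflow. It deliberately does not use the LangGraph API. The node bodies, the state fields, and the stopping rule are all hypothetical stand-ins chosen for illustration, not how Airdex is actually built.

```python
from typing import Callable, Dict

# Shared state passed from node to node (all field names are illustrative).
State = Dict[str, object]


def planner(state: State) -> State:
    # Hypothetical planning step: turn the question into a search plan.
    return {**state,
            "plan": f"search: {state['question']}",
            "attempts": state["attempts"] + 1}


def retriever(state: State) -> State:
    # Stand-in for real retrieval: each attempt "finds" one more document.
    docs = state["documents"] + [f"doc-{state['attempts']}"]
    return {**state, "documents": docs}


def scorer(state: State) -> State:
    # Toy score: simply the number of documents gathered so far.
    return {**state, "score": len(state["documents"])}


def router(state: State) -> str:
    # Loop back to the planner until we have enough documents
    # or have used up our attempts (this is the "cyclic" part).
    if state["score"] >= 2 or state["attempts"] >= 3:
        return "END"
    return "planner"


NODES: Dict[str, Callable[[State], State]] = {
    "planner": planner,
    "retriever": retriever,
    "scorer": scorer,
}
# Fixed edges; after "scorer" the router picks the next node.
EDGES = {"planner": "retriever", "retriever": "scorer"}


def run_workflow(question: str) -> State:
    state: State = {"question": question, "plan": "",
                    "documents": [], "score": 0, "attempts": 0}
    node = "planner"
    while node != "END":
        state = NODES[node](state)
        node = EDGES.get(node) or router(state)
    return state
```

Calling `run_workflow("What are world models?")` walks planner, retriever, and scorer twice before the router ends the run. Because every step reads and returns one explicit state object, the path through the graph stays predictable and easy to inspect.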

Expected Result: we’ll see, in one place, if any agents are slow, expensive, or hallucinating, and iterate on them without redeploying the whole platform. Don’t get locked into opaque magic boxes; make your AI stack as observable as the rest of your infrastructure.
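As a rough illustration of what "observable" means here, the sketch below records latency and an assumed per-call cost for each agent using only the Python standard library. It is not the Langfuse SDK. The `traced` decorator, the `TRACES` store, the `retrieve` function, and the cost figure are hypothetical names and numbers made up for this example.

```python
import time
from collections import defaultdict
from functools import wraps

# In-memory trace store: agent name -> list of recorded calls (illustrative only).
TRACES = defaultdict(list)


def traced(agent_name, cost_per_call_usd=0.0):
    """Record how long each call takes and an assumed flat cost per call."""
    def decorator(fn):
        @wraps(fn)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                return fn(*args, **kwargs)
            finally:
                # Runs whether the call succeeded or raised.
                TRACES[agent_name].append({
                    "seconds": time.perf_counter() - start,
                    "cost_usd": cost_per_call_usd,
                })
        return wrapper
    return decorator


def summarize():
    """Aggregate calls, time, and cost per agent in one place."""
    return {
        name: {
            "calls": len(rows),
            "total_seconds": sum(r["seconds"] for r in rows),
            "total_cost_usd": sum(r["cost_usd"] for r in rows),
        }
        for name, rows in TRACES.items()
    }


@traced("retriever", cost_per_call_usd=0.002)  # made-up cost figure
def retrieve(query):
    # Placeholder for a real retrieval agent.
    return [f"result for {query}"]
```

After a few calls to `retrieve`, `summarize()` returns one dictionary per agent with call counts, total time, and total assumed cost. That is the kind of single view that makes slow or expensive agents easy to spot.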

Trending News

The big AI headline this week: OpenAI launched GPT-5.2, framed as its “best model yet for everyday professional use” and a direct response to Google’s Gemini 3.

After an internal “code red” earlier this month, OpenAI paused non-core projects to accelerate GPT-5.2, which improves general intelligence, coding, and long-context reasoning—and is specifically tuned for things like spreadsheet creation, presentation building, tool use, and complex multi-step projects.

The other half of the story is Hollywood money: Disney is investing about $1B in OpenAI and signing a three-year licensing deal that lets Sora-style video generators legally use hundreds of characters from Star Wars, Marvel, and Pixar, with some AI-generated clips slated to appear on Disney+.

Quote we’re pondering:

“AI agents will become the primary way we interact with computers in the future. They will be able to understand our needs and preferences, and proactively help us with tasks and decision making.”

- Satya Nadella, CEO of Microsoft